一、目的

当Hive的计算引擎是spark或mr时,发现海豚调度HQL任务的脚本并不同,mr更简洁

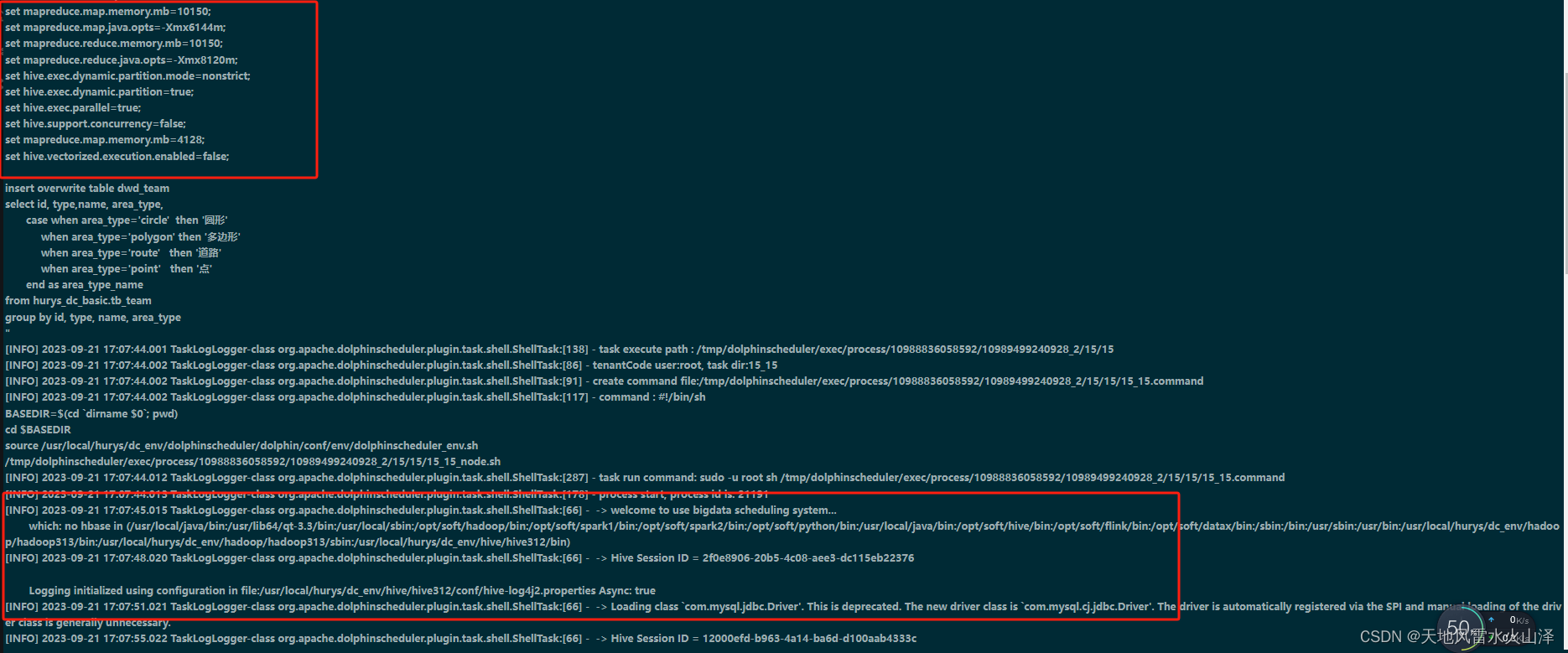

二、Hive的计算引擎是Spark时

(一)海豚调度脚本

#! /bin/bash

服务器托管网source /etc/profile

nowdate=`date –date=’0 days ago’ “+%Y%m%d”`

yesdate=`date -d yesterday +%Y-%m-%d`

hive -e “

use hurys_dc_dwd;

set hive.vectorized.execution.enabled=false;

set hive.auto.conv服务器托管网ert.join=false;

set mapreduce.map.memory.mb=10150;

set mapreduce.map.java.opts=-Xmx6144m;

set mapreduce.reduce.memory.mb=10150;

set mapreduce.reduce.java.opts=-Xmx8120m;

set hive.exec.dynamic.partition.mode=nonstrict;

set hive.exec.dynamic.partition=true;

set hive.exec.parallel=true;

set hive.support.concurrency=false;

set mapreduce.map.memory.mb=4128;

set hive.vectorized.execution.enabled=false;

set hive.exec.dynamic.partition=true;

set hive.exec.dynamic.partition.mode=nonstrict;

set hive.exec.max.dynamic.partitions.pernode=1000;

set hive.exec.max.dynamic.partitions=1500;

insert overwrite table dwd_evaluation partition(day=’$yesdate’)

select device_no,

cycle,

lane_num,

create_time,

lane_no,

volume,

queue_len_max,

sample_num,

stop_avg,

delay_avg,

stop_rate,

travel_dist,

travel_time_avg

from hurys_dc_ods.ods_evaluation

where volume is not null and date(create_time)= ‘$yesdate’

group by device_no, cycle, lane_num, create_time, lane_no,

volume, queue_len_max, sample_num, stop_avg, delay_avg, stop_rate, travel_dist, travel_time_avg

“

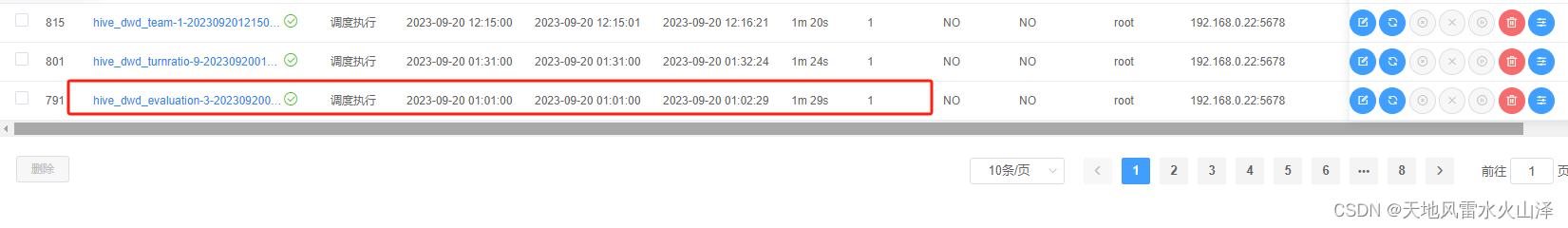

(二)任务流执行结果

调度执行成功,时间需要1m29s

调度执行成功,时间需要1m29s

三、Hive的计算引擎是MR时

(一)海豚调度脚本

#! /bin/bash

source /etc/profile

nowdate=`date –date=’0 days ago’ “+%Y%m%d”`

yesdate=`date -d yesterday +%Y-%m-%d`

hive -e “

use hurys_dc_dwd;

set hive.exec.dynamic.partition=true;

set hive.exec.dynamic.partition.mode=nonstrict;

set hive.exec.max.dynamic.partitions.pernode=1000;

set hive.exec.max.dynamic.partitions=1500;

insert overwrite table dwd_evaluation partition(day=’$yesdate’)

select device_no,

cycle,

lane_num,

create_time,

lane_no,

volume,

queue_len_max,

sample_num,

stop_avg,

delay_avg,

stop_rate,

travel_dist,

travel_time_avg

from hurys_dc_ods.ods_evaluation

where volume is not null and date(create_time)= ‘$yesdate’

group by device_no, cycle, lane_num, create_time, lane_no,

volume, queue_len_max, sample_num, stop_avg, delay_avg, stop_rate, travel_dist, travel_time_avg

“

(二)任务流执行结果

调度执行成功,时间需要1m3s

四、脚本区别

计算引擎为spark时,脚本比计算引擎为mr多,而且spark运行速度比mr慢

set hive.vectorized.execution.enabled=false;

set hive.auto.convert.join=false;

set mapreduce.map.memory.mb=10150;

set mapreduce.map.java.opts=-Xmx6144m;

set mapreduce.reduce.memory.mb=10150;

set mapreduce.reduce.java.opts=-Xmx8120m;

set hive.exec.dynamic.partition.mode=nonstrict;

set hive.exec.dynamic.partition=true;

set hive.exec.parallel=true;

set hive.support.concurrency=false;

set mapreduce.map.memory.mb=4128;

set hive.vectorized.execution.enabled=false;

mr为计算引擎时任务流脚本不能添加上面这些优化语句,不然会报错

在海豚调度HiveSQL任务流,推荐使用mr作为Hive的计算引擎。

不仅不需要安装spark,而且脚本简洁、任务执行速度快!

服务器托管,北京服务器托管,服务器租用 http://www.fwqtg.net

相关推荐: Linux实操指令

一、关机重启命令

二、用户登录注销

三、用户管理

4.常用指令

五、rpm、yum和apt一、关机重启命令 shutdown -h now 立即关机 shutdown -h 1 一分钟后关机 shutdown -r now 现在重新启动计算机 halt 关机 reboot 现在重新启动计算机 sync 把内存数据同步到磁盘 注意:不管是重启还是关闭…