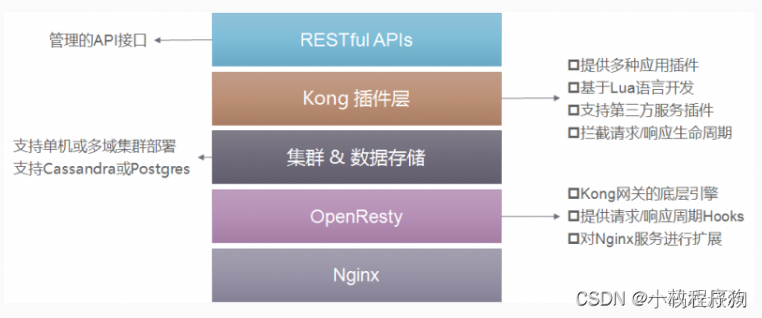

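Kong Gateway is a lightweight, fast, and flexible cloud-native API gateway. It sits in front of your service applications and dynamically controls, analyzes, and routes requests and responses. Kong Gateway implements your API traffic policies through a flexible, low-code, plugin-based approach. https://docs.konghq.com/gateway/latest/#features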

- Architecture

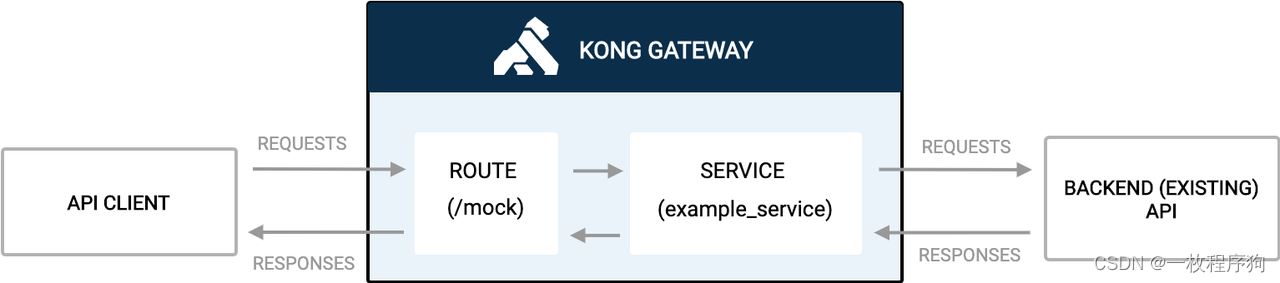

- Features https://docs.konghq.com/gateway/3.4.x/get-started/services-and-routes/

- Configure services and routes

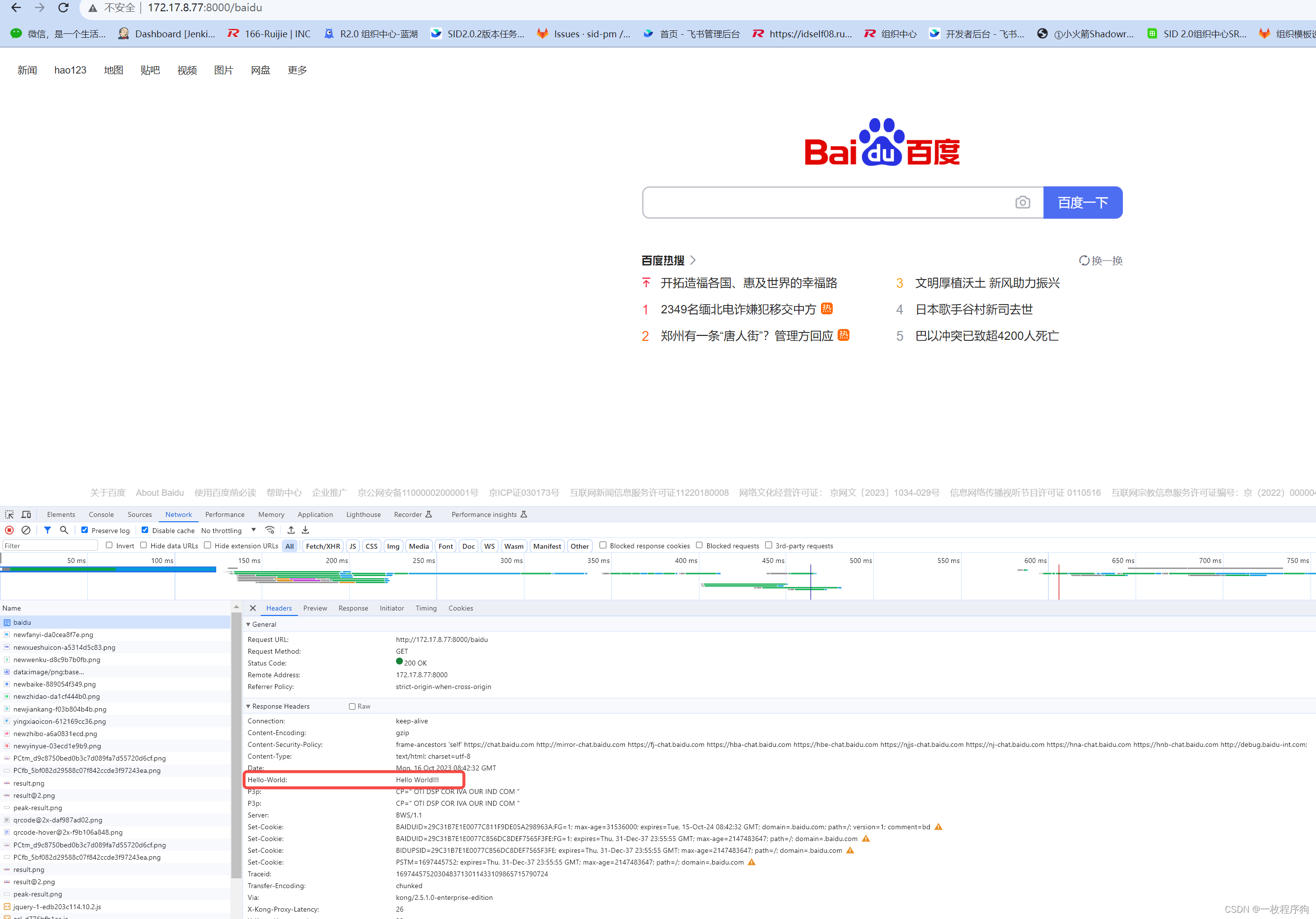

1. Services and routes can be configured through the Admin API or through the web UI, and Kong proxies requests to them.

service

    curl -i -s -X POST http://localhost:8001/services \
      --data name=linkid_service \
      --data url='http://172.17.8.77:8081/linkid'

route

    curl -i -X POST http://localhost:8001/services/linkid_service/routes \
      --data 'paths[]=/api' \
      --data name=linkid_route

proxy

    http://localhost:8000/api/user/getUserId/{xxx}
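To make the link between the proxy URL and the upstream concrete, here is a minimal sketch of the rewrite Kong performs when a route strips its matched prefix (`strip_path=true`, the default for new routes). The helper name `upstream_url` and the user ID `42` are made up for illustration and are not part of Kong.

```shell
#!/usr/bin/env bash
# Sketch: compute the upstream URL for a proxied request, assuming the route
# strips its matched path prefix (strip_path=true).
# upstream_url is a hypothetical helper for illustration, not a Kong command.
upstream_url() {
  local service_url="$1" route_path="$2" request_path="$3"
  # Remove the route prefix from the request path...
  local rest="${request_path#"$route_path"}"
  # ...and append what is left to the service URL.
  printf '%s%s\n' "${service_url%/}" "$rest"
}

upstream_url 'http://172.17.8.77:8081/linkid' '/api' '/api/user/getUserId/42'
```

With the service and route defined above, this prints `http://172.17.8.77:8081/linkid/user/getUserId/42`: the `/api` prefix is dropped and the remainder is appended to the service path `/linkid`.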

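- Configure rate limiting to protect upstream services

1. Rate limits can be configured per service or per route, which helps guard against DoS attacks.

    curl -X POST http://localhost:8001/services/linkid_service/plugins \
      --data "name=rate-limiting" \
      --data config.minute=5 \
      --data config.policy=local

Send six requests in a row through a route that belongs to the rate-limited service (`/baidu` here; substitute a path that matches your own route):

    for _ in {1..6}; do curl -s -i localhost:8000/baidu; echo; sleep 1; done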
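Reading six full responses by eye is tedious, so the loop above can be condensed into a count of rejected requests. This is only a sketch: `probe` and `count_status` are hypothetical helper names, and it assumes the limiter answers with HTTP 429 once the quota is used up.

```shell
#!/usr/bin/env bash
# Sketch: send N requests and count how many came back with a given status.
# probe and count_status are hypothetical helper names.

# Print one HTTP status code per request.
probe() {
  local url="$1" n="${2:-6}" i=0
  while [ "$i" -lt "$n" ]; do
    curl -s -o /dev/null -w '%{http_code}\n' "$url"
    i=$((i + 1))
  done
}

# Read status codes on stdin and print how many equal the given code.
count_status() {
  awk -v code="$1" '$1 == code { n++ } END { print n + 0 }'
}
```

For example, `probe http://localhost:8000/baidu 6 | count_status 429` should report at least one rejected request when `config.minute=5` is in effect.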

- Use proxy caching to improve system performance

1. Caching can be configured per service, per route, or per consumer.

service

    curl -X POST http://localhost:8001/services/linkid_service/plugins \
      --data "name=proxy-cache" \
      --data "config.request_method=GET" \
      --data "config.response_code=200" \
      --data "config.content_type=application/json; charset=utf-8" \
      --data "config.cache_ttl=30" \
      --data "config.strategy=memory"

route

    curl -X POST http://localhost:8001/routes/linkid_route/plugins \
      --data "name=proxy-cache" \
      --data "config.request_method=GET" \
      --data "config.response_code=200" \
      --data "config.content_type=application/json; charset=utf-8" \
      --data "config.cache_ttl=30" \
      --data "config.strategy=memory"

consumer

    # Create a new consumer
    curl -X POST http://localhost:8001/consumers/ \
      --data username=sasha

    curl -X POST http://localhost:8001/consumers/sasha/plugins \
      --data "name=proxy-cache" \
      --data "config.request_method=GET" \
      --data "config.response_code=200" \
      --data "config.content_type=application/json; charset=utf-8" \
      --data "config.cache_ttl=30" \
      --data "config.strategy=memory"

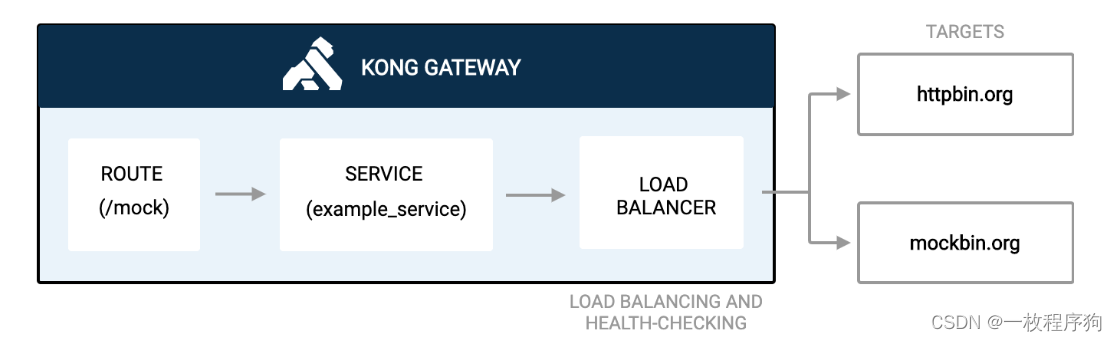
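One way to confirm that the cache is working is to look at the `X-Cache-Status` response header that the proxy-cache plugin sets (values such as `Miss` and `Hit`). The sketch below extracts that header from raw response headers; `cache_status` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: pull the X-Cache-Status value out of raw HTTP response headers.
# cache_status is a hypothetical helper name.
cache_status() {
  # Strip carriage returns, then match the header name case-insensitively.
  tr -d '\r' | awk -F': *' 'tolower($1) == "x-cache-status" { print $2 }'
}
```

Feeding it the headers of two consecutive requests, for example `curl -s -o /dev/null -D - <proxy URL> | cache_status`, should show `Miss` on the first call and `Hit` on a repeat made within the 30-second `cache_ttl`.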

- Load balancing for horizontal service scaling

Load balancing distributes API request traffic across multiple upstream services. By keeping any single resource from being overloaded, it improves the responsiveness of the whole system and reduces failures.

    curl -X POST http://localhost:8001/upstreams \
      --data name=example_upstream

    curl -X POST http://localhost:8001/upstreams/example_upstream/targets \
      --data target='mockbin.org:80'

    curl -X POST http://localhost:8001/upstreams/example_upstream/targets \
      --data target='httpbin.org:80'

    curl -X PATCH http://localhost:8001/services/example_service \
      --data host='example_upstream'
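When an upstream has more than a couple of targets, the repeated calls above can be wrapped in a loop. The following is a small sketch under stated assumptions: `KONG_ADMIN` and `add_targets` are names chosen for this example, and the Admin API is assumed to listen on `localhost:8001` as in the commands above.

```shell
#!/usr/bin/env bash
# Sketch: register several targets on one upstream in a loop.
# KONG_ADMIN and add_targets are names chosen for this example.
KONG_ADMIN="${KONG_ADMIN:-http://localhost:8001}"

add_targets() {
  local upstream="$1" target
  shift
  # One POST per target, using the same endpoint as the manual commands.
  for target in "$@"; do
    curl -s -X POST "$KONG_ADMIN/upstreams/$upstream/targets" \
      --data "target=$target"
  done
}
```

Usage would look like `add_targets example_upstream mockbin.org:80 httpbin.org:80`; the registered targets can then be listed with a GET on the same `/upstreams/example_upstream/targets` endpoint.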

- Protect services with key authentication (at the service level, the route level, or globally)

1. Key authentication: a request is let through only if it carries a designated key in a header.

    curl -X POST http://localhost:8001/services/example_service/plugins \
      --data name=key-auth

    curl -X POST http://localhost:8001/routes/example_route/plugins \
      --data name=key-auth

2. Basic authentication

https://docs.konghq.com/hub/kong-inc/basic-auth/

3. OAuth 2.0 authentication

https://docs.konghq.com/hub/kong-inc/oauth2/

4. LDAP authentication

https://docs.konghq.com/hub/kong-inc/ldap-auth/

5. OpenID Connect

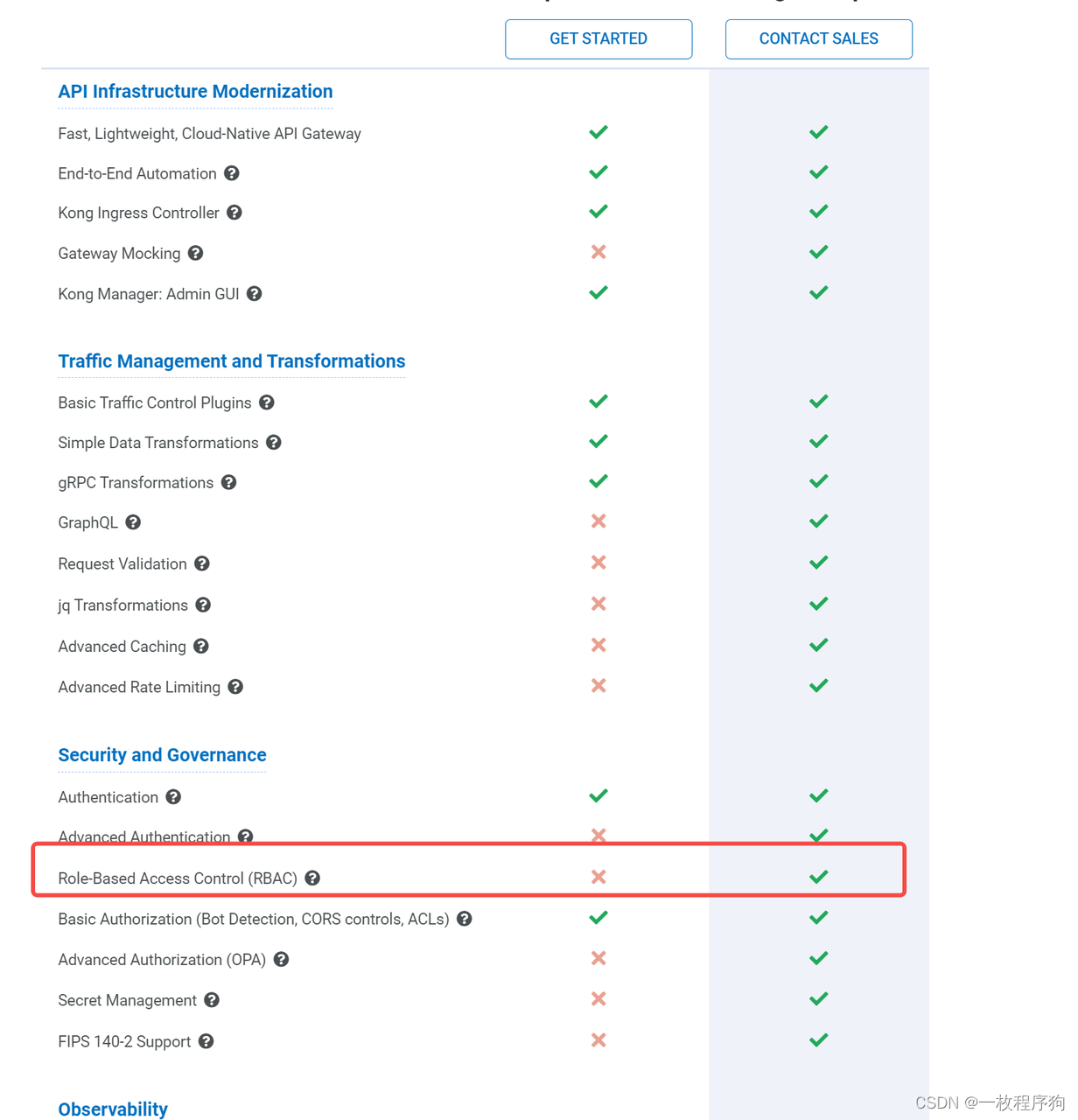

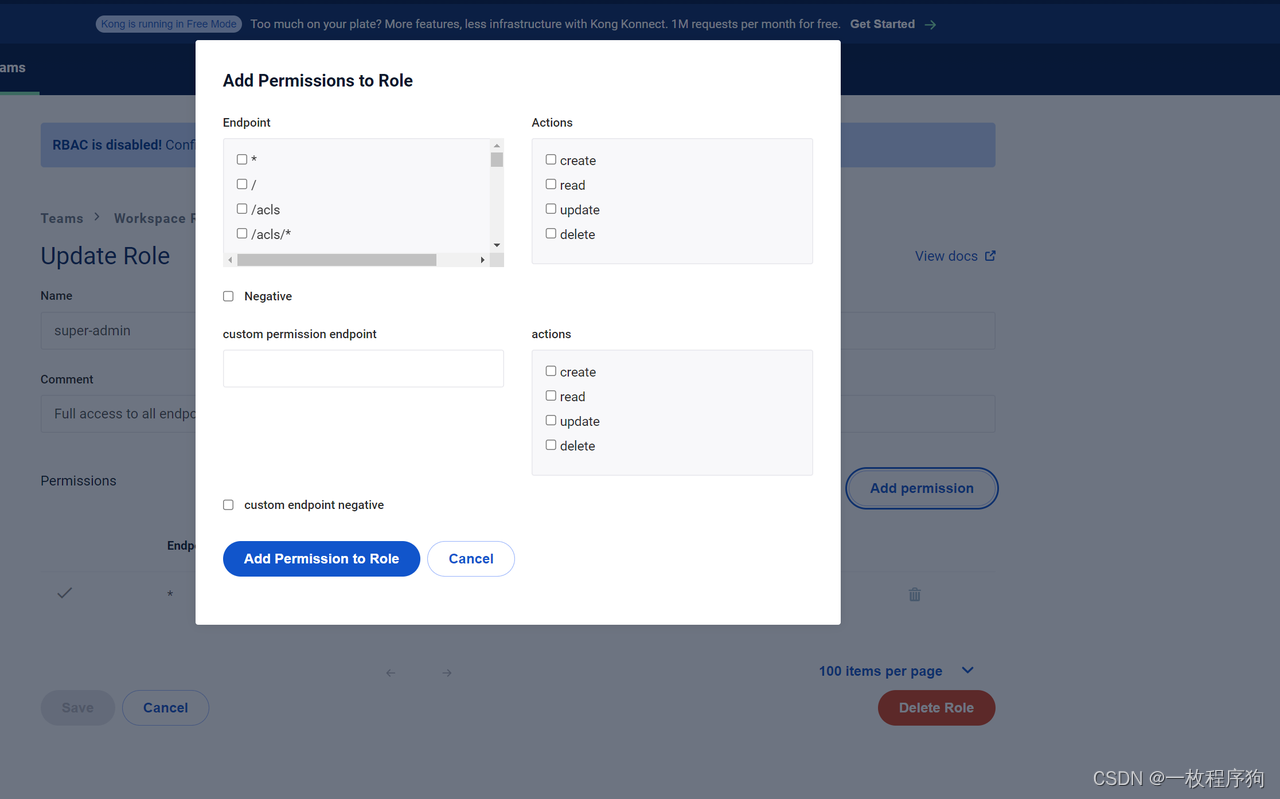

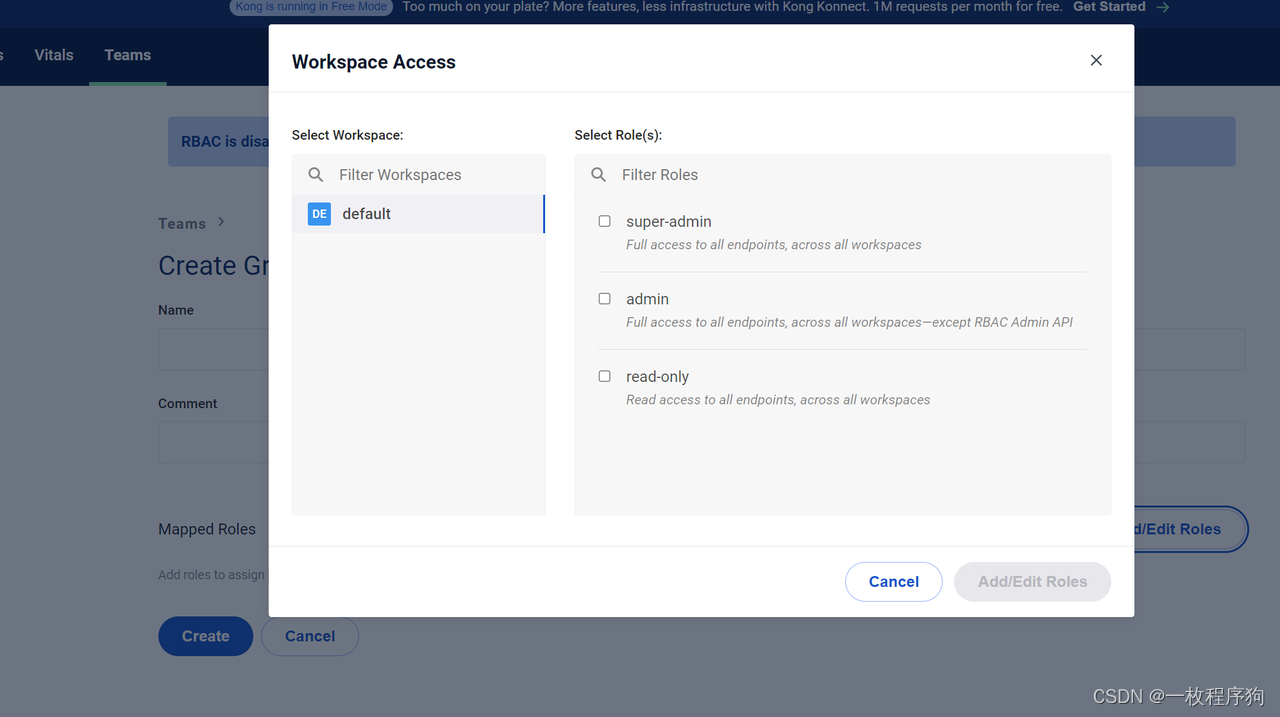
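For the key authentication case (item 1 above), enabling the plugin is only half of the setup: a consumer also needs a key, and clients must send it. The sketch below shows both steps. `KONG_ADMIN`, `KONG_PROXY`, and the two function names are assumptions made for this example, and the key is expected in the `apikey` header, which is the plugin's default key name.

```shell
#!/usr/bin/env bash
# Sketch: issue an API key to a consumer and call the proxy with it.
# KONG_ADMIN, KONG_PROXY and both function names are assumptions made for
# this example; pass your own key value.
KONG_ADMIN="${KONG_ADMIN:-http://localhost:8001}"
KONG_PROXY="${KONG_PROXY:-http://localhost:8000}"

# Attach a key-auth credential to an existing consumer.
create_key() {
  local consumer="$1" key="$2"
  curl -s -X POST "$KONG_ADMIN/consumers/$consumer/key-auth" --data "key=$key"
}

# Request a path through the proxy, sending the key in the apikey header,
# and print only the HTTP status code.
call_with_key() {
  local path="$1" key="$2"
  curl -s -o /dev/null -w '%{http_code}\n' -H "apikey: $key" "$KONG_PROXY$path"
}
```

For instance, `create_key sasha top-secret-key` followed by `call_with_key /api top-secret-key` should succeed, while a request that carries no key should be rejected with 401.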

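- Access control: RBAC introduces the concepts of workspaces and groups (available only in the Enterprise edition)

In addition to authenticating admins and segmenting workspaces, Kong Gateway can enforce role-based access control (RBAC) on all resources, using the roles assigned to admins.

https://docs.konghq.com/gateway/3.4.x/kong-manager/auth/rbac/

- Implementation

Access can only be controlled at the endpoint level. Permissions cover the create, read, update, and delete actions; I am not sure of the finer details, because this is supported only in the Enterprise edition. Parameter-level control is not possible.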

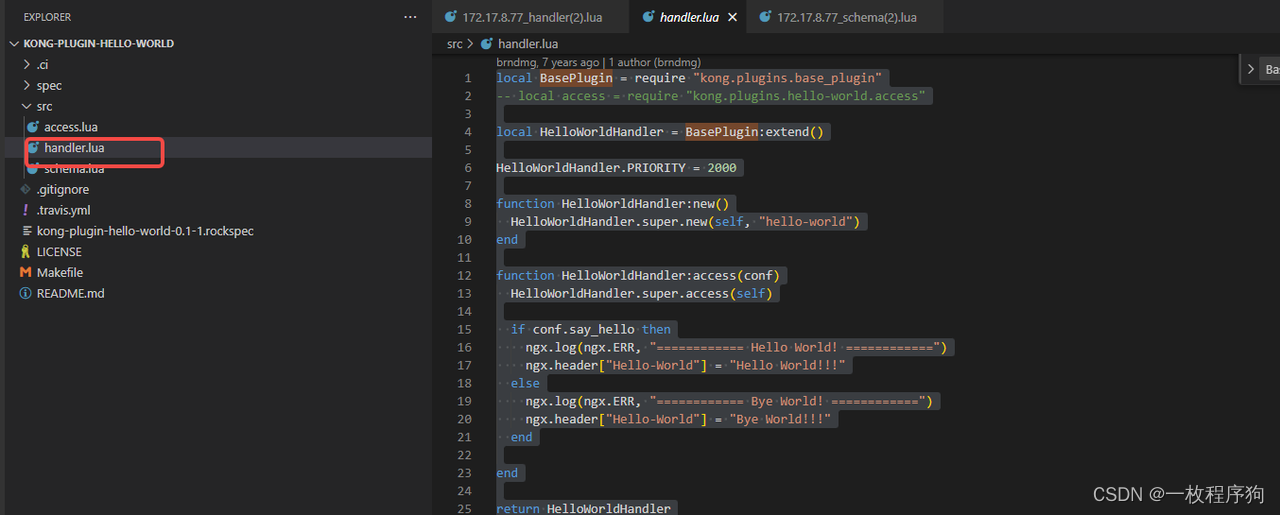

- How to write a custom plugin

https://docs.konghq.com/gateway/latest/plugin-development/file-structure/

- Structure: the plugin must include handler.lua and schema.lua
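As an illustration of those two mandatory files, the script below scaffolds a minimal plugin. It is a sketch, not the plugin used in this post: the plugin name `demo`, the `X-Demo` header, the `header_value` field, and `PLUGIN_ROOT` are all assumptions. The `kong/plugins/<name>/` directory layout is what a Lua package path of the form `<root>/?.lua` resolves `kong.plugins.demo.handler` to.

```shell
#!/usr/bin/env bash
# Sketch: scaffold the two mandatory files of a plugin called "demo".
# The plugin name, header name and PLUGIN_ROOT are assumptions for this example.
PLUGIN_ROOT="${PLUGIN_ROOT:-./plugins}"
PLUGIN_DIR="$PLUGIN_ROOT/kong/plugins/demo"
mkdir -p "$PLUGIN_DIR"

# handler.lua: the plugin logic, one function per request phase.
cat > "$PLUGIN_DIR/handler.lua" <<'EOF'
local DemoHandler = {
  PRIORITY = 1000,
  VERSION = "0.1.0",
}

-- Runs before the request is proxied: add a header for the upstream.
function DemoHandler:access(conf)
  kong.service.request.set_header("X-Demo", conf.header_value)
end

return DemoHandler
EOF

# schema.lua: the configuration the plugin accepts.
cat > "$PLUGIN_DIR/schema.lua" <<'EOF'
return {
  name = "demo",
  fields = {
    { config = {
        type = "record",
        fields = {
          { header_value = { type = "string", default = "hello" } },
        },
    } },
  },
}
EOF

ls "$PLUGIN_DIR"
```

The handler hooks only the `access` phase here; other phases can be added as further methods on the same table.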

- How to deploy the plugin

https://docs.konghq.com/gateway/latest/plugin-development/distribution/

- Quick start: the following command automatically sets up a Kong environment, but the image it uses is not the one we want

    curl -Ls https://get.konghq.com/quickstart | bash

- Build your own Kong image

- Define entrypoint.sh

    #!/usr/bin/env bash
    set -Eeo pipefail

    # usage: file_env VAR [DEFAULT]
    #    ie: file_env 'XYZ_DB_PASSWORD' 'example'
    # (will allow for "$XYZ_DB_PASSWORD_FILE" to fill in the value of
    #  "$XYZ_DB_PASSWORD" from a file, especially for Docker's secrets feature)
    file_env() {
      local var="$1"
      local fileVar="${var}_FILE"
      local def="${2:-}"
      # Do not continue if _FILE env is not set
      if ! [ "${!fileVar:-}" ]; then
        return
      elif [ "${!var:-}" ] && [ "${!fileVar:-}" ]; then
        echo >&2 "error: both $var and $fileVar are set (but are exclusive)"
        exit 1
      fi
      local val="$def"
      if [ "${!var:-}" ]; then
        val="${!var}"
      elif [ "${!fileVar:-}" ]; then
        # Read the value from the file that the _FILE variable points to
        val="$(< "${!fileVar}")"
      fi
      export "$var"="$val"
      unset "$fileVar"
    }

    export KONG_NGINX_DAEMON=${KONG_NGINX_DAEMON:=off}

    if [[ "$1" == "kong" ]]; then

      all_kong_options="/usr/local/share/lua/5.1/kong/templates/kong_defaults.lua"
      set +Eeo pipefail
      # Apply file_env to every option listed in Kong's defaults template
      while IFS='' read -r LINE || [ -n "${LINE}" ]; do
        opt=$(echo "$LINE" | grep "=" | sed "s/=.*$//" | sed "s/ //" | tr '[:lower:]' '[:upper:]')
        file_env "KONG_$opt"
      done < "$all_kong_options"
      set -Eeo pipefail

      file_env KONG_PASSWORD
      PREFIX=${KONG_PREFIX:=/usr/local/kong}

      if [[ "$2" == "docker-start" ]]; then
        kong prepare -p "$PREFIX" "$@"

        # remove all dangling sockets in $PREFIX dir before starting Kong
        LOGGED_SOCKET_WARNING=0
        for localfile in "$PREFIX"/*; do
          if [ -S "$localfile" ]; then
            if (( LOGGED_SOCKET_WARNING == 0 )); then
              printf >&2 'WARN: found dangling unix sockets in the prefix directory '
              printf >&2 '(%q) ' "$PREFIX"
              printf >&2 'while preparing to start Kong. This may be a sign that Kong '
              printf >&2 'was previously shut down uncleanly or is in an unknown state '
              printf >&2 'and could require further investigation.\n'
              LOGGED_SOCKET_WARNING=1
            fi
            rm -f "$localfile"
          fi
        done

        ln -sfn /dev/stdout "$PREFIX/logs/access.log"
        ln -sfn /dev/stdout "$PREFIX/logs/admin_access.log"
        ln -sfn /dev/stderr "$PREFIX/logs/error.log"

        exec /usr/local/openresty/nginx/sbin/nginx \
          -p "$PREFIX" \
          -c nginx.conf
      fi
    fi

    exec "$@"

- Define a Dockerfile

    FROM kong/kong-gateway:latest

    # Ensure any patching steps are executed as root user
    USER root

    # Add custom plugin to the image

    # Ensure kong user is selected for image execution
    USER kong

    # Run kong
    COPY ./entrypoint.sh /
    ENTRYPOINT ["/entrypoint.sh"]

    EXPOSE 8000 8002 8001 8003

    STOPSIGNAL SIGQUIT

    HEALTHCHECK --interval=10s --timeout=10s --retries=10 CMD kong health

    CMD ["kong", "docker-start"]

- Build the image

    docker build -t kong/kong-gateway:latest .

- Define the startup environment variables in kong-quickstart.env

    KONG_PG_HOST=kong-quickstart-database
    KONG_PG_USER=kong
    KONG_PG_PASSWORD=kong
    KONG_ADMIN_LISTEN=0.0.0.0:8001, 0.0.0.0:8444 ssl
    KONG_PROXY_ACCESS_LOG=/dev/stdout
    KONG_ADMIN_ACCESS_LOG=/dev/stdout
    KONG_PROXY_ERROR_LOG=/dev/stderr
    KONG_ADMIN_ERROR_LOG=/dev/stderr

- Start Kong with the custom plugin loaded

    docker run -d --name kong-quickstart-gateway --network=kong-quickstart-net --env-file "kong-quickstart.env" -p 8000:8000 -p 8001:8001 -p 8002:8002 -p 8003:8003 -p 8004:8004 \
      -e "KONG_LUA_PACKAGE_PATH=/plugins/?.lua" \
      -v "/plugins:/plugins" \
      -e "KONG_PLUGINS=bundled,demo" \
      kong/kong-gateway:latest

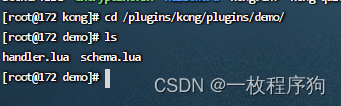

- The plugin path must follow exactly this layout

- Otherwise Kong reports an error

https://blog.csdn.net/cccfire/article/details/133862691?spm=1001.2014.3001.5501

- Check whether the plugin is loaded

    curl -s http://localhost:8001/plugins/enabled | grep demo

- Add a service

    curl -XPOST -H 'Content-Type: application/json' \
      -d '{"name":"example.service","url":"http://httpbin.org"}' \
      http://localhost:8001/services/

- Add a route

    curl -XPOST -H 'Content-Type: application/json' \
      -d '{"paths":["/"],"strip_path":false}' \
      http://localhost:8001/services/example.service/routes

- Apply the plugin (the command attaches it to the service, so it also covers the route above)

    curl -XPOST --data "name=demo" \
      http://localhost:8001/services/example.service/plugins

- Check the result: the request header added inside the plugin now shows up in the request

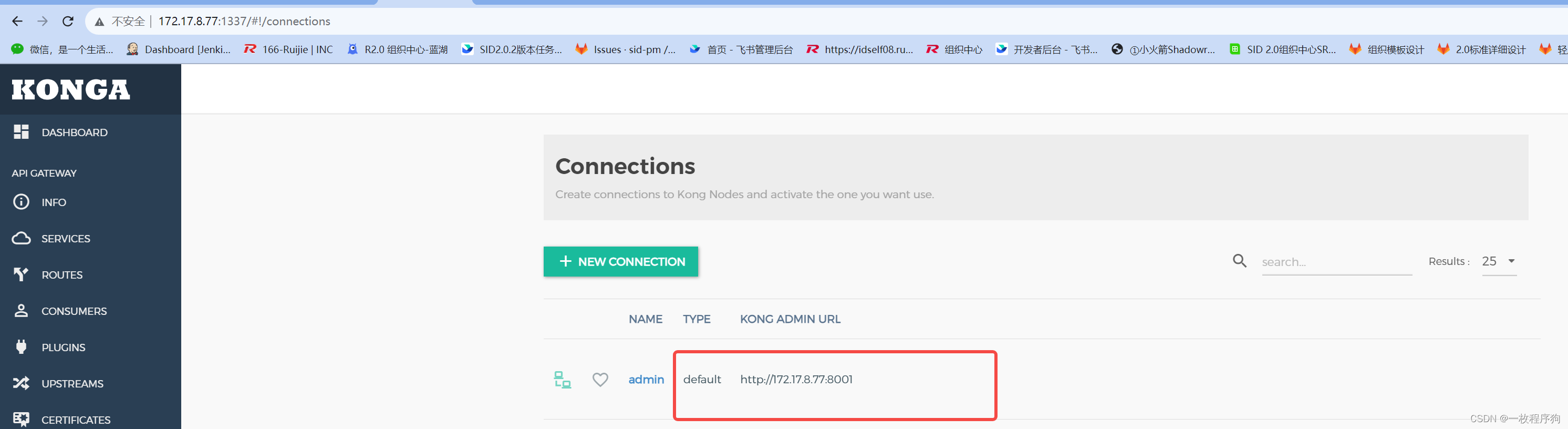

- Konga is also worth getting to know

- It can manage multiple Kong instances

    docker pull pantsel/konga:latest

    docker run -d --name konga --network=kong-quickstart-net -p 1337:1337 pantsel/konga:latest
