Errors when running YOLOv8 on Windows 10 can have many causes. Common ones include:

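- Missing dependencies: YOLOv8 may need additional dependencies such as OpenCV, CUDA and cuDNN. Make sure they are installed correctly and that their versions match YOLOv8's requirements.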

- Wrong file paths: check that the paths to the model file, the weights file, and the images or videos are correct, and that you have read permission for them.

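- Model configuration errors: YOLOv8 uses a model configuration file to define the network architecture. Confirm that the file exists and that its path is correct. YOLOv8 model configurations are YAML files such as yolov8n.yaml; yolov3.cfg and yolov4.cfg are Darknet configuration files for earlier YOLO versions and are not used by YOLOv8.

- Weights file errors: training from pretrained weights requires a weights file containing model parameters already trained on a large dataset (for YOLOv8, a .pt file such as yolov8n.pt). Make sure you downloaded the correct file and saved it at the path your code uses.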

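- GPU-related errors: if your system has a GPU and you want to use it for acceleration, make sure the GPU driver is installed correctly and that the CUDA and cuDNN versions match what YOLOv8 (in practice, the installed PyTorch build) requires.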
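Several of the checks above can be scripted. The following is a minimal sketch, not part of YOLOv8: the helper names `check_environment` and `find_unreadable` are hypothetical, and it assumes the usual import names `ultralytics`, `torch` and `cv2` (OpenCV). It reports versions and GPU status instead of failing when a package is missing.

```python
import importlib
import os
from typing import Any, Dict, List


def check_environment() -> Dict[str, Any]:
    """Report versions of packages YOLOv8 training commonly depends on.

    A package that fails to import is reported as None instead of raising,
    so the check still runs on a partially set-up machine.
    """
    report: Dict[str, Any] = {}
    for name in ("ultralytics", "torch", "cv2"):  # cv2 is OpenCV's import name
        try:
            module = importlib.import_module(name)
            report[name] = str(getattr(module, "__version__", "unknown"))
        except (ImportError, OSError):  # OSError: e.g. a DLL that fails to load on Windows
            report[name] = None

    # GPU details can only be queried when PyTorch imported successfully.
    report["cuda_available"] = False
    report["cuda_version"] = None
    report["cudnn_version"] = None
    if report["torch"] is not None:
        import torch

        report["cuda_available"] = torch.cuda.is_available()
        report["cuda_version"] = torch.version.cuda  # None for CPU-only builds
        report["cudnn_version"] = torch.backends.cudnn.version()  # None if cuDNN is unavailable
    return report


def find_unreadable(paths: List[str]) -> List[str]:
    """Return the paths that do not exist or that this process cannot read."""
    return [p for p in paths if not (os.path.exists(p) and os.access(p, os.R_OK))]


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
    # Example file names only; replace them with your own weights and dataset config.
    print("missing or unreadable:", find_unreadable(["yolov8n.pt", "fsd.yaml"]))
```

If `cuda_available` is False on a machine with an NVIDIA GPU, the installed PyTorch is usually a CPU-only build or does not match the driver.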

The problem:

Running YOLOv8 on Windows 10 fails with the following error:

    Traceback (most recent call last):
      File "<string>", line 1, in <module>
      File "D:\anaconda3\envs\yolov8\Lib\multiprocessing\spawn.py", line 116, in spawn_main
        exitcode = _main(fd, parent_sentinel)
                   ^^^^^^^^^^^^^^^^^^^^^^^^^
      File "D:\anaconda3\envs\yolov8\Lib\multiprocessing\spawn.py", line 125, in _main
        prepare(preparation_data)
    …

    Traceback (most recent call last):
      File "<string>", line 1, in <module>
      File "D:\anaconda3\envs\yolov8\Lib\multiprocessing\spawn.py", line 122, in spawn_main
        exitcode = _main(fd, parent_sentinel)
                   ^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "D:\anaconda3\envs\yolov8\Lib\multiprocessing\spawn.py", line 131, in _main
        prepare(preparation_data)
      File "D:\anaconda3\envs\yolov8\Lib\multiprocessing\spawn.py", line 242, in prepare
        _fixup_main_from_path(data['init_main_from_path'])
      File "D:\anaconda3\envs\yolov8\Lib\multiprocessing\spawn.py", line 293, in _fixup_main_from_path
        main_content = runpy.run_path(main_path,
                       ^^^^^^^^^^^^^^^^^^^^^^^^^
      File "<frozen runpy>", line 291, in run_path
      File "<frozen runpy>", line 98, in _run_module_code
      File "<frozen runpy>", line 88, in _run_code
      File "F:\workspace\yolov8\train.py", line 8, in <module>
        results = model.train(data="fsd.yaml", epochs=200, batch=16)
                  ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "D:\anaconda3\envs\yolov8\Lib\site-packages\ultralytics\engine\model.py", line 377, in train
        self.trainer.train()
      File "D:\anaconda3\envs\yolov8\Lib\site-packages\ultralytics\engine\trainer.py", line 192, in train
        self._do_train(world_size)
      File "D:\anaconda3\envs\yolov8\Lib\site-packages\ultralytics\engine\trainer.py", line 294, in _do_train
        self._setup_train(world_size)
      File "D:\anaconda3\envs\yolov8\Lib\site-packages\ultralytics\engine\trainer.py", line 259, in _setup_train
        self.train_loader = self.get_dataloader(self.trainset, batch_size=batch_size, rank=RANK, mode='train')
                            ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "D:\anaconda3\envs\yolov8\Lib\site-packages\ultralytics\models\yolo\detect\train.py", line 40, in get_dataloader
        return build_dataloader(dataset, batch_size, workers, shuffle, rank)  # return dataloader
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "D:\anaconda3\envs\yolov8\Lib\site-packages\ultralytics\data\build.py", line 101, in build_dataloader
        return InfiniteDataLoader(dataset=dataset,
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "D:\anaconda3\envs\yolov8\Lib\site-packages\ultralytics\data\build.py", line 29, in __init__
        self.iterator = super().__iter__()
                        ^^^^^^^^^^^^^^^^^^
      File "D:\anaconda3\envs\yolov8\Lib\site-packages\torch\utils\data\dataloader.py", line 441, in __iter__
        return self._get_iterator()
               ^^^^^^^^^^^^^^^^^^^^
      File "D:\anaconda3\envs\yolov8\Lib\site-packages\torch\utils\data\dataloader.py", line 388, in _get_iterator
        return _MultiProcessingDataLoaderIter(self)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "D:\anaconda3\envs\yolov8\Lib\site-packages\torch\utils\data\dataloader.py", line 1042, in __init__
        w.start()
      File "D:\anaconda3\envs\yolov8\Lib\multiprocessing\process.py", line 121, in start
        self._popen = self._Popen(self)
                      ^^^^^^^^^^^^^^^^^
      File "D:\anaconda3\envs\yolov8\Lib\multiprocessing\context.py", line 224, in _Popen
        return _default_context.get_context().Process._Popen(process_obj)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "D:\anaconda3\envs\yolov8\Lib\multiprocessing\context.py", line 336, in _Popen
        return Popen(process_obj)
               ^^^^^^^^^^^^^^^^^^
      File "D:\anaconda3\envs\yolov8\Lib\multiprocessing\popen_spawn_win32.py", line 45, in __init__
        prep_data = spawn.get_preparation_data(process_obj._name)
                    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "D:\anaconda3\envs\yolov8\Lib\multiprocessing\spawn.py", line 160, in get_preparation_data
        _check_not_importing_main()
      File "D:\anaconda3\envs\yolov8\Lib\multiprocessing\spawn.py", line 140, in _check_not_importing_main
        raise RuntimeError('''
    RuntimeError:
            An attempt has been made to start a new process before the
            current process has finished its bootstrapping phase.

            This probably means that you are not using fork to start your
            child processes and you have forgotten to use the proper idiom
            in the main module:

                if __name__ == '__main__':
                    freeze_support()
                    ...

            The "freeze_support()" line can be omitted if the program
            is not going to be frozen to produce an executable.

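Fix

On Windows, multiprocessing starts child processes with spawn, and each child re-runs the main script (see the `_fixup_main_from_path` and `runpy.run_path` frames in the traceback). Because `train.py` calls `model.train(...)` at module level, the child starts training again and tries to launch DataLoader worker processes of its own before it has finished bootstrapping, which raises the RuntimeError. The error message names the standard remedy: put the training code in `train.py` under `if __name__ == '__main__':`. The workaround below takes a different route and disables the DataLoader worker processes.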

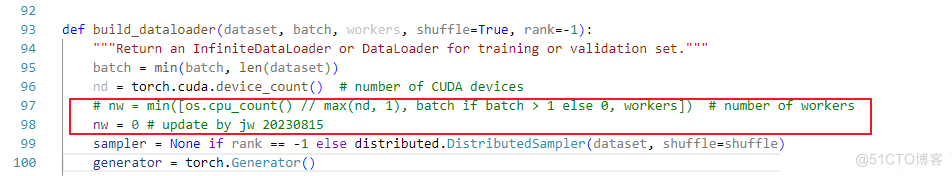

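In `build.py` under `D:\anaconda3\envs\yolov8\Lib\site-packages\ultralytics\data`, edit the function `build_dataloader` and replace the line `nw = min([os.cpu_count() // max(nd, 1), batch if batch > 1 else 0, workers])  # number of workers` with `nw = 0`. With zero workers, PyTorch's DataLoader loads batches in the main process, so no child processes are spawned and the error no longer occurs; data loading may be slower as a result.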

    def build_dataloader(dataset, batch, workers, shuffle=True, rank=-1):
        """Return an InfiniteDataLoader or DataLoader for training or validation set."""
        batch = min(batch, len(dataset))
        nd = torch.cuda.device_count()  # number of CUDA devices
        # nw = min([os.cpu_count() // max(nd, 1), batch if batch > 1 else 0, workers])  # number of workers
        nw = 0  # update by jw 20230815
        .....
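The same effect can probably be had without editing the installed package. The traceback shows `get_dataloader` passing a `workers` value into `build_dataloader`, and the `min(...)` expression in the excerpt evaluates to 0 whenever `workers` is 0. Ultralytics exposes this as the `workers` training argument, so `model.train(data="fsd.yaml", epochs=200, batch=16, workers=0)` should behave like the patch above, assuming your Ultralytics version computes `nw` the same way. The sketch below re-implements only that one expression as a standalone function (`dataloader_workers` is a hypothetical name) so the arithmetic can be checked without installing anything.

```python
import os
from typing import Optional


def dataloader_workers(batch: int, workers: int, n_cuda: int, cpu_count: Optional[int] = None) -> int:
    """Re-implementation of the worker-count line from build_dataloader.

    Mirrors: nw = min([os.cpu_count() // max(nd, 1), batch if batch > 1 else 0, workers])
    where nd is the number of CUDA devices.
    """
    if cpu_count is None:
        # os.cpu_count() can return None; fall back to 1 (the original line would fail instead).
        cpu_count = os.cpu_count() or 1
    per_device = cpu_count // max(n_cuda, 1)  # CPU cores available per CUDA device
    batch_limit = batch if batch > 1 else 0   # no workers for a batch size of 1
    return min([per_device, batch_limit, workers])


if __name__ == "__main__":
    print(dataloader_workers(batch=16, workers=8, n_cuda=1, cpu_count=16))  # 8
    print(dataloader_workers(batch=16, workers=0, n_cuda=1, cpu_count=16))  # 0
```

Because the result is a `min`, any single term being 0 forces `nw` to 0, which is why `workers=0` is enough to keep the DataLoader in the main process.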

